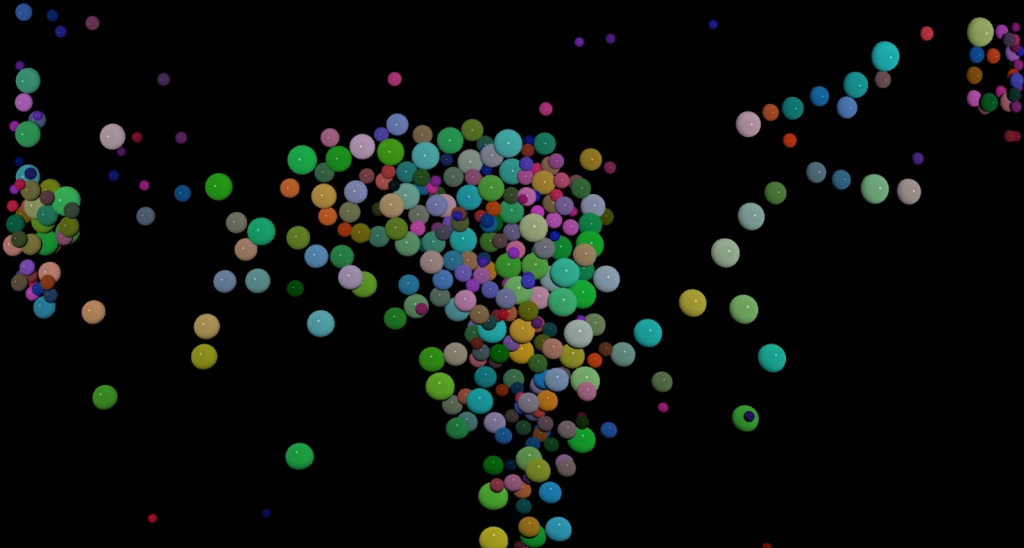

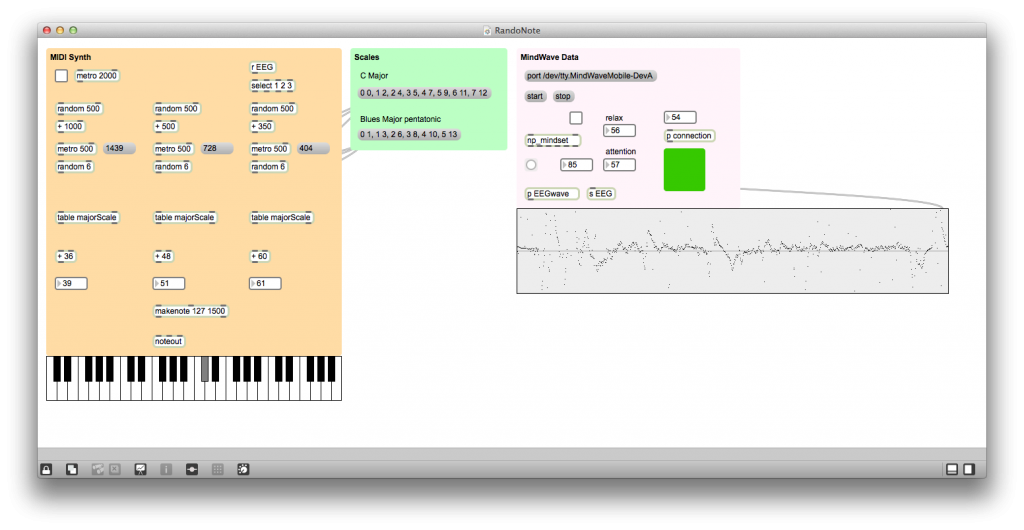

Facebook Mosaic 2.0: Painting with social data

Facebook Mosaic is a platform I developed that lets people create art from their social data. My current work focuses on capturing and visualizing social data to provide utility to the masses. We upload an extensive amount of data to our social…
